Most introductory geology professors teach students about earthquakes by assigning readings and showing diagrams of tectonic plates and fault lines to the class. But Paul Low is not most instructors.

“You guys can go wherever you like,” he tells a group of learners. “I’m going to go over to the epicenter and fly through and just kind of get a feel.”

Low is leading a virtual tour of the Earth’s bowels, directly beneath New Zealand’s South Island, where a magnitude 7.8 earthquake struck last November. Outfitted with headsets and hand controllers, the students are “flying” around the seismic hotbed and navigating through layers of the Earth’s surface.

Low, who taught undergraduate geology and environmental sciences and is now a research associate at Washington and Lee University, is among a small group of profs-turned-technologists who are experimenting with virtual reality’s applications in higher education. Early VR programs were about showing students places, say the Louvre or ancient Rome, in low-cost headsets like the Google Cardboard viewer. But these latest iterations go further, creating entire environments—from the subatomic level to the solar system—that students can manipulate. Low and his colleagues at other campuses are trying to shepherd VR educational content from being something “that students look at” to something they can interact with.

For added fun, Low’s virtual persona wears a stormtrooper helmet as he directs learners around the tremor’s epicenter.

Uncharted territory

As with any relatively new technology, the path for early adopters of virtual reality in academia is unclear. “It’s pretty much a no-man’s land,” says JD Mills, an innovation systems engineer at Davidson College. He’s worked on a variety of VR projects, including building Pokémon Go tours of campus and creating 3D models of particle-collider data for physics students to interact with.

So how do you get educational materials from textbook to virtual world? First, you need 3D content. Low downloaded models from the Protein Data Bank, for example, and brought them into virtual environments for a biology class. With VR headsets, students can navigate their way through amino acids and hydrogen bonds. “A lot of it is learning how to use the materials that are available,” he says. “The big thing we’re interested in is being able to plug your novel content into VR.”

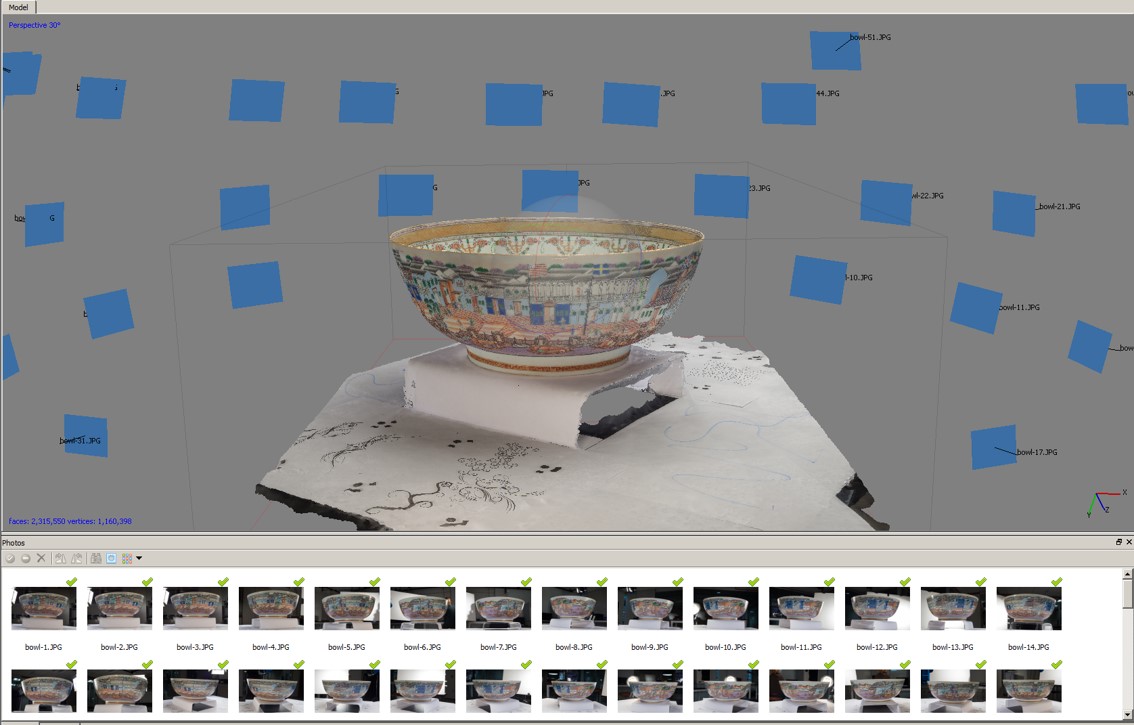

In other cases, he’s created models using handheld scanners that deliver accurate measurements of objects, such as archaeological artifacts and human body parts. The website Sketchfab showcases plenty of objects that have been scanned and modeled in VR, from a mandarin orange to a triceratops skull.

Once professors have 3D content, they can bring it into virtual environments using game-development engines. Low and Mills prefer Unity, a platform behind popular video games. They use the engine to bring 3D assets together into a narrative.

Low recently created a virtual-reality homework assignment for a Geology 101 class. He downloaded crystal structures from the American Mineralogist Crystal Structure Database, created 3D models of them and used Unity to add interactive components in VR. Students use an HTC Vive system, which includes a headset and hand controllers, to follow a linear progression of activities, similar to playing a video game and moving on to the next level. They finish the assignment once they’ve completed all of the activities.

Washington and Lee University and Davidson have invested in HTC Vive systems, which cost $800 to $1,500 once you add hand controllers and other accessories. There are other systems that cost about the same, including the Oculus Rift. Although colleges including Virginia Tech and Stanford have built their own VR systems from scratch, Low and Mills are adamant that the relative affordability and ease of use of consumer-grade VR tech will broaden access. “The expertise on our end comes from a couple of guys who watch a lot of YouTube tutorials, read message boards, dissect example projects, and, occasionally, come up with something original,” Low says.

In addition to the monetary investment, these systems also require the physical space for students to move around and explore virtual worlds. “If you get a grant funding a dozen head displays, you need space to walk around, and space is at a premium at any academic setting,” Low says. Right now Washington and Lee students use a computer lab and another classroom.

Dev support

Unity sees a goldmine in the new crop of academics experimenting with virtual reality for instruction. The company calls this phase of tech adoption “the pioneer moment,” says Clive Downie, chief marketing officer of Unity. “You really just want to give the most powerful tools in the most accessible way and allow them to get on with it.”

He says the company has invested in making the tools easy for anyone—artists, game developers, educators—to pick up and play with. “You have to allow people to experiment. They’re creating the conventions and processes that can form the foundation of how to do things right. If you give too many instructions, you might miss something.”

So far Unity has taken a hands-off approach, avoiding telling people exactly how to use its products. But Downie says in the next 12 to 18 months the company will likely start working with some colleges on curriculum and design principles. “There’s starting to be a large opportunity for us to tap into the leading programs in VR and AR and partner with them in co-creation of content.”

Downie gets excited about the potential to visualize data and “be inside of it” in VR. “It could help whole segments of the population who’ve been challenged by the way math is traditionally taught.”

At Washington and Lee, Low and his colleague David Pfaff are spreading the word about VR to faculty well outside of the STEM departments they typically work with. They’ve strapped body sensors on dance students so that learners can virtually step into a room, and then watch and interact with 3D models of their own skeletons.

Low is excited about creating virtual environments where students and faculty can inhabit the same space and interact, as in the earthquake study. That experience is easier for learning designers to create, he says, because they don’t have to program all of the sequences of events that could potentially happen during a solo activity. Instead, the instructor handles the interactive components on the spot.

Once professors or instructional designers build a virtual fault line or VR archaeological site, “the humans involved have a lifetime of being able to interact with each other there.” That’s the way colleges do it when they build traditional classrooms—one space can be used in class after class for years.